RRAM and the AI Hardware Revolution

Why Resistive RAM could be the key to solving AI's biggest bottleneck and enabling the next generation of computing.

The Potential Surface is a blog supported by Orbital Materials

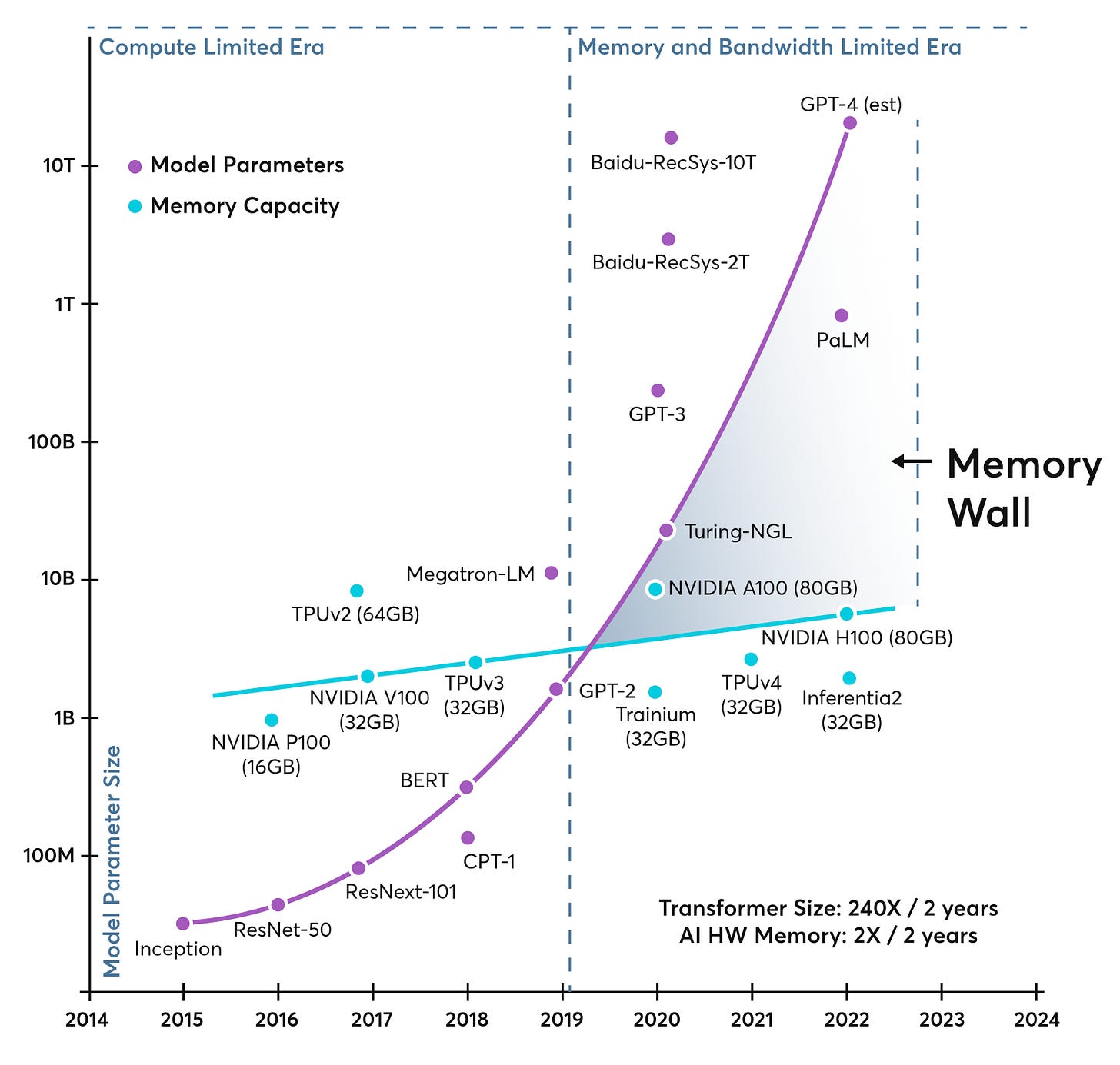

The memory and storage landscape within data centers is undergoing a seismic shift, driven by the insatiable demands of artificial intelligence (AI), machine learning (ML), and the ever-growing torrent of data. Processors have grown exponentially faster over the past decades, but memory performance has lagged, exposing the limitations of traditional architectures, a challenge now referred to as the “memory wall.” The industry has responded with faster clock speeds and wider-bandwidth memory, but these solutions are hitting their own physical limits.

This performance gap between processors and memory now forces even the most advanced chips to spend most of their time simply waiting for data, a reality that is becoming the single biggest roadblock to the future of AI.

The Current Hierarchy

The memory hierarchy works much like our own brain. At the top are the ultra-fast CPU caches, the processor's immediate thoughts. Just below that is the main memory, DRAM, which acts like our short-term memory. It holds all the data for currently running programs and gives it to the processor as needed. DRAM is very fast, but it's also power-hungry and volatile; just like our short-term memory, when the power goes off, the information is gone.

Information that isn't immediately needed is stored in the "long-term memory": Flash storage. This information is stored permanently because Flash is non-volatile. Flash also makes a great long-term memory device because it's so dense and cheap that it can hold upwards of 100 times more information than DRAM. The issue with Flash, however, is that it is very slow to read from and write to, and it degrades with each update cycle.

This massive speed gap between DRAM and Flash further exacerbates the memory wall problem. DRAM needs a partner it can offload and reload information with that is much quicker than Flash, but that is also non-volatile and far more energy-efficient than DRAM itself.

Bridging the Gap with RRAM

To overcome these limitations, a new class of energy-efficient, persistent memory is emerging: Resistive Random-Access Memory (RRAM). Offering the best of both worlds, combining near-DRAM speeds with the non-volatility and efficiency of Flash, RRAM stands out as a promising next-generation candidate that could redesign the memory hierarchy itself and be the much-needed right-hand man to DRAM.

Beyond its core features of fast switching speeds, low power consumption, and non-volatility, RRAM could unlock revolutionary concepts that pave the way for more intelligent data centers, such as:

In-memory computing

Neuromorphic computing

Enhanced hardware-level security

How RRAM Differs from Conventional Memory Devices

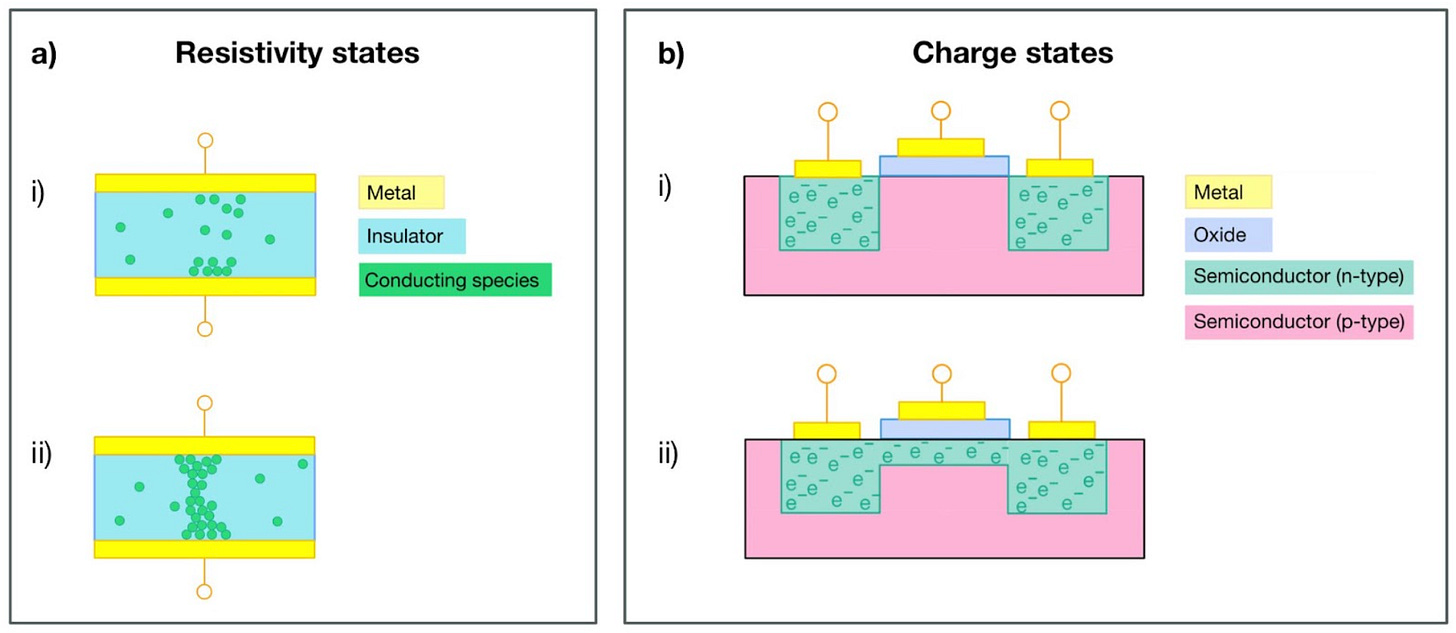

Training an AI model involves updating billions of parameters, which requires a staggering number of read/write cycles to memory. To store the 1s and 0s that represent this information, traditional memory works by physically trapping and moving electrons within the material. The ‘charge states’ shown in the figure above illustrate this concept.

There are two main types of these “charge state” memory devices: volatile memory (e.g. DRAM) and non-volatile memory (e.g. Flash). Volatile memory is fast to read and write, but requires continuous power to retain information. Non-volatile memory can store data without power, but is slower to write and degrades quickly if frequently updated.

RRAM takes a different approach: it encodes information by changing the resistance of a material using brief voltage pulses. These pulses trigger the movement of oxygen atoms, forming or dissolving tiny conductive filaments that represent a 0 or 1. This process is illustrated in the ‘resistivity states’ shown in the figure above.

Perhaps counterintuitively, moving atoms to change the resistance requires less energy than shifting and trapping electrons to change the charge state. This new switching mechanism allows RRAM to switch states in nanoseconds, which is up to 1000x faster than traditional non-volatile Flash memory. Once the resistance has been changed, the device retains its state without needing continuous power, unlike volatile DRAM. Furthermore, this mechanism allows RRAM to change states many more times than Flash memory without facing the same material degradation.

This means RRAM offers a way to write data more efficiently, store information with lower power consumption, and last longer through countless read and write cycles!
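The resistance-based encoding described above can be sketched as a toy model. This is only an illustration, not a device simulation: the resistance values, read voltage, current threshold, and the `RramCell` class are all assumptions chosen for readability, and which state counts as a 1 is a convention rather than something fixed by the physics.

```python
from dataclasses import dataclass

# Illustrative numbers only; real devices vary with material and process.
R_LOW_OHMS = 10_000         # filament formed (low-resistance state)
R_HIGH_OHMS = 1_000_000     # filament dissolved (high-resistance state)
READ_VOLTAGE = 0.2          # assumed small enough not to disturb the state
READ_THRESHOLD_AMPS = 5e-6  # current above this is read as a 1


@dataclass
class RramCell:
    """Hypothetical single cell: the stored bit is just a resistance."""
    resistance_ohms: float = R_HIGH_OHMS

    def write(self, bit: int) -> None:
        # Model a brief voltage pulse as an instant resistance change.
        self.resistance_ohms = R_LOW_OHMS if bit else R_HIGH_OHMS

    def read(self) -> int:
        # Ohm's law: a low resistance lets more current through.
        current = READ_VOLTAGE / self.resistance_ohms
        return 1 if current > READ_THRESHOLD_AMPS else 0


cell = RramCell()
cell.write(1)
print(cell.read())  # the state persists in the object with no "refresh" step
```

Nothing in the model needs power to hold its value between `write` and `read`, which is the property that separates this approach from charge-based DRAM.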

Can RRAM be practically implemented?

For any new hardware to be adopted, it must be compatible with existing semiconductor infrastructure. RRAM devices use a simple metal–insulator–metal (MIM) structure that integrates well with CMOS technology already used in data centers.

Furthermore, RRAM can be fabricated using standard processes like atomic layer deposition and sputtering, enabling cost-effective scaling without major retooling.

RRAM utilises abundant oxides like HfO₂ and TiO₂ for the insulating layer. This helps keep both fabrication costs and environmental impact low. The variety of available oxides also allows manufacturers to fine-tune the properties of RRAM devices for different applications and prioritise specific characteristics.

Novel Applications of RRAM

In-memory computing

The benefits of RRAM go beyond improving the memory hierarchy: it could fundamentally change the rules of computing. Its architecture can enable in-memory computing, where operations occur directly inside the memory.

If operations like the matrix multiplications essential for AI happened directly where the data lives, it would remove some of the need for the constant shuttling of data between processor and memory that leads to the memory wall. In-memory computing would be a massive leap forward in both speed and energy efficiency, and could pave the way for the next generation of artificial intelligence.
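One common way to picture this is a crossbar array, where each cell's conductance stores a matrix entry and the input vector is applied as row voltages. The sketch below is an idealized model under that assumption: the function name `crossbar_matvec` and the example values are made up for illustration, and it ignores wire resistance, noise, and the converters a real chip would need.

```python
def crossbar_matvec(conductances, voltages):
    """Column currents of an idealized crossbar.

    conductances[i][j] is the conductance (siemens) of the cell joining
    row i to column j; voltages[i] is the voltage applied to row i.
    Each cell passes I = G * V (Ohm's law), and currents sharing a
    column wire add up (Kirchhoff's current law), so every column
    computes one dot product in a single step.
    """
    n_rows = len(conductances)
    n_cols = len(conductances[0])
    if len(voltages) != n_rows:
        raise ValueError("need one voltage per row")

    currents = [0.0] * n_cols
    for i in range(n_rows):
        for j in range(n_cols):
            currents[j] += conductances[i][j] * voltages[i]
    return currents


# Example: a 2x2 array of stored weights and a 2-element input.
weights_as_conductance = [[1e-6, 2e-6],
                          [3e-6, 4e-6]]
inputs_as_voltage = [0.1, 0.2]
print(crossbar_matvec(weights_as_conductance, inputs_as_voltage))
```

The nested loop here stands in for what the physics would do in parallel: the weights never leave the array, and only the inputs and results travel.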

Neuromorphic computing

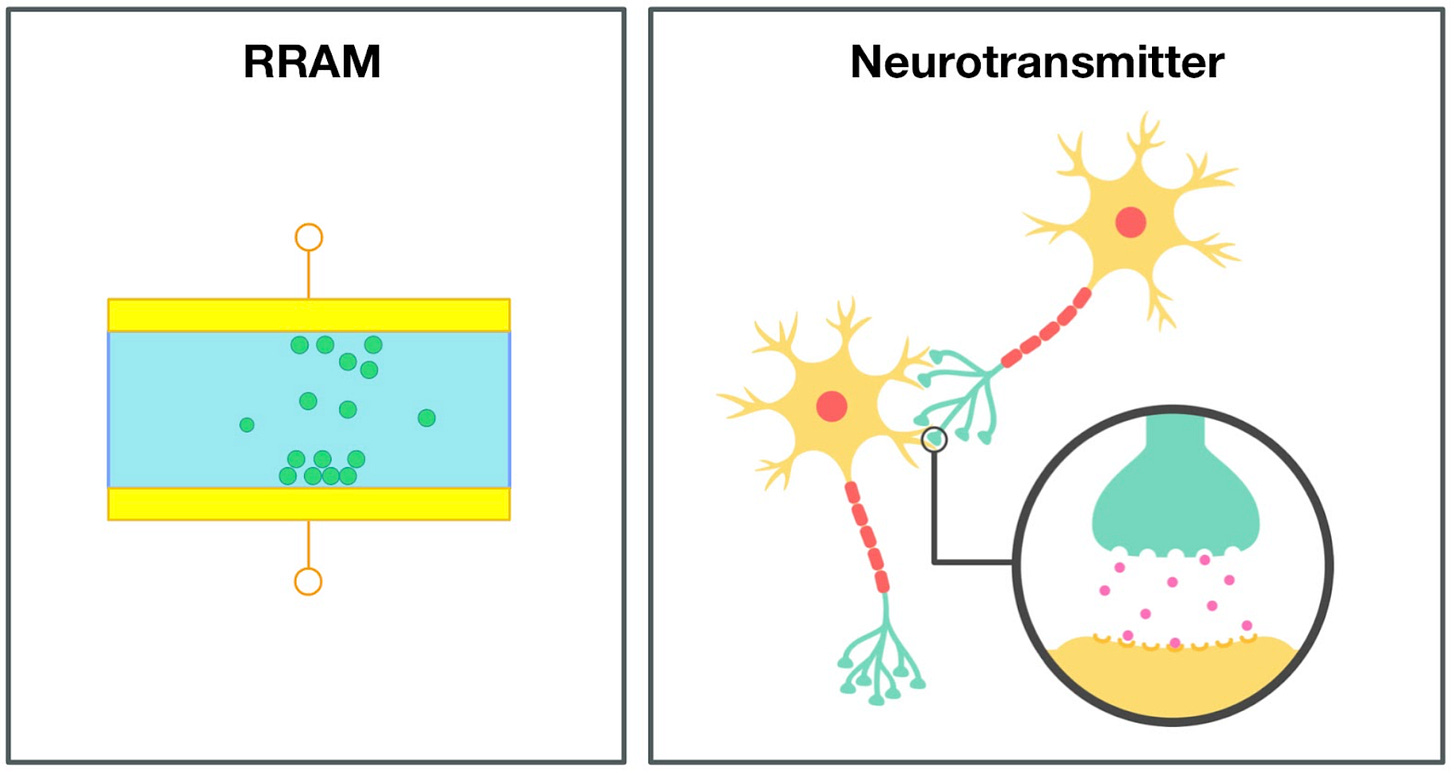

RRAM is also a candidate for neuromorphic computing, a field dedicated to building hardware that mimics the brain’s synaptic behaviour. While traditional memory is like a simple on/off light switch (a 1 or a 0), a single RRAM cell can act like a dimmer switch, holding many distinct resistance levels. This multi-state capability is the key to creating artificial synapses, allowing us to build brain-inspired chips that can learn and process information in a more natural and efficient way.
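To make the dimmer-switch idea concrete, here is a minimal sketch of storing a neural network weight in a cell with a limited number of resistance levels. The function `quantize_weight`, the default of 16 levels, and the weight range of -1 to 1 are assumptions for illustration, not figures from any particular device.

```python
def quantize_weight(weight, levels=16, w_min=-1.0, w_max=1.0):
    """Snap a weight to the nearest of `levels` evenly spaced values.

    Returns (level_index, stored_value). The index is what a multi-level
    cell would hold as one of its distinct resistance states.
    """
    if levels < 2:
        raise ValueError("a cell needs at least two levels")
    clamped = min(max(weight, w_min), w_max)  # out-of-range weights saturate
    step = (w_max - w_min) / (levels - 1)
    index = round((clamped - w_min) / step)
    return index, w_min + index * step


# A two-level cell is the on/off light switch; more levels give the dimmer.
for n in (2, 4, 16):
    print(n, quantize_weight(0.3, levels=n))
```

With two levels the stored value can only be one of the extremes, while more levels let the cell hold something much closer to the original weight.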

Security

RRAM's physical structure also opens the door to a new frontier in hardware security.

When an RRAM cell stores a 1 or a 0, it forms a tiny, unique filament of atoms. The exact path this filament takes is random and physically impossible to reproduce, giving each chip a built-in, unclonable fingerprint.

This unique physical identity can be used to create powerful encryption keys that are part of the hardware itself, not just the software. This offers a robust defense against physical hacking, reverse engineering, and cloning, making systems fundamentally more secure.
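A rough sketch of how such a fingerprint could become a key is shown below. Everything in it is a stand-in: `simulate_chip` uses a seeded random number generator in place of real manufacturing randomness (actual hardware has no seed to copy), the pairwise comparison is just one simple way to turn analog values into bits, and the function names are hypothetical. Real designs also need error correction, because physical readouts are noisy from one read to the next.

```python
import hashlib
import random


def simulate_chip(seed, n_cells=256):
    """Stand-in for device randomness: one resistance value per cell."""
    rng = random.Random(seed)
    return [rng.lognormvariate(0.0, 0.5) for _ in range(n_cells)]


def fingerprint_bits(resistances):
    """Compare neighbouring cells in pairs; each pair yields one bit."""
    return [
        1 if resistances[i] > resistances[i + 1] else 0
        for i in range(0, len(resistances) - 1, 2)
    ]


def derive_key(bits):
    """Hash the fingerprint into a 256-bit key, returned as hex."""
    return hashlib.sha256(bytes(bits)).hexdigest()


chip_a = simulate_chip(seed=1)
chip_b = simulate_chip(seed=2)
print(derive_key(fingerprint_bits(chip_a)) == derive_key(fingerprint_bits(chip_a)))
print(derive_key(fingerprint_bits(chip_a)) == derive_key(fingerprint_bits(chip_b)))
```

The key is never stored anywhere: it is recomputed from the chip's own cells each time it is needed, which is what ties it to the hardware rather than the software.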

Challenges and Next Steps

While RRAM shows strong potential for efficient, sustainable, high-performance AI hardware, several hurdles remain before widespread adoption. These issues relate to both the understanding of the fundamental physics and the manufacturing of the device.

Interface and Material Stability

A primary issue is the physical stability of the memory cell itself, specifically at the interface between the metal electrode and the oxide switching layer. The memory works by moving atoms to create or break a filament. If this process isn't perfectly controlled, atoms can drift or react in unintended ways. Any instability could cause the resistance of the cell to change over time, leading to data corruption. Approaches such as adding a thin metal layer to act as an oxygen reservoir show promise for preventing unwanted oxygen movement during switching, helping to enhance long-term stability and reliability [29].

Understanding and Manufacturing Consistency

While the basic principles of how RRAM devices work are well known, the precise physics of the atomic switching mechanism is still debated. This incomplete understanding is compounded by inconsistencies that arise during the fabrication process. To overcome these challenges, researchers are using a combination of advanced materials simulation and direct observation with techniques like in-situ Transmission Electron Microscopy (TEM) to build a deeper understanding and improve the design of these novel devices.

Environmental Variability

For any memory to be viable, it must work reliably under a wide range of conditions. Currently, the performance of RRAM chips can be too sensitive to changes in operating temperature. To be considered reliable, a commercial memory product must remain stable from a cold start up to the peak temperatures reached inside a data center.

Final Thoughts

The relentless growth of data and the computational demands of AI are accelerating the adoption of next-generation technologies.

RRAM stands out not just as a faster, more energy-efficient memory option that could be placed strategically within the memory hierarchy, but as a platform for rethinking computing itself, from in-memory acceleration to secure hardware and neuromorphic systems. While challenges remain around reliability, variability, and large-scale deployment, rapid progress is being made. Each breakthrough brings us closer to scalable, energy-efficient, faster AI infrastructure, and innovations like RRAM offer a critical path forward, helping data centers evolve as compute demand continues to rise.

Thanks so much for reading! If you found this interesting, please consider sharing it and letting us know what you think.

The Surface Potential is written by Materials Scientist and Writer Annabella Wheatley. You can also find me on Twitter/X
